If you have used Spark in Azure Synapse, prepare to be pleasantly surprised by the compute experience in Microsoft Fabric: Spark compute starts a lot faster because the underlying technology has changed. The Data Engineering and Data Science experiences in Fabric include managed Spark compute, which, like earlier Spark compute, charges you when it is in use. The difference is that the nodes are reserved for you rather than allocated when you start the compute. As a result, compute starts in 30 seconds or less instead of the 4 minutes you wait for Azure Synapse compute to start. If you have capacity needs that the default managed Spark compute will not meet, you can always create a custom pool. Custom pools are created in a specific workspace, so you will need Administrator permissions on that workspace to create them. You can also make the new pool the workspace's default pool, so it is the compute that starts in that workspace.

Writing Spark Code in Fabric

If you are writing code in Spark, the two languages you will most likely use are Python and Scala, but you could also choose Java, R, or ANSI SQL. Notice that, unlike Azure Synapse, Fabric does not include .NET as a language you can use, which is an interesting development. The other thing to keep in mind when writing SQL in Spark is that you will be writing ANSI SQL, not the T-SQL you use in Lakehouses and SQL endpoints within Fabric. While T-SQL is largely ANSI compliant, I realized the extent of the differences when I tried to use T-SQL's DATEPART, which is spelled with an underscore (date_part) in Spark, and when I had to use instr instead of T-SQL's CHARINDEX. The differences are minor, and Stack Overflow or Copilot can help you work through them. Just remember that you may not be able to use exactly the same code as in the SQL endpoint, and you will be fine.
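To make these differences concrete, here is a minimal sketch in Python of a hypothetical helper, `tsql_to_spark_sql` (it is not part of Fabric, Spark, or any library). It rewrites simple, non-nested `CHARINDEX` and `DATEPART` calls into their Spark SQL equivalents, `instr` and `date_part`. The easy thing to miss is that the argument order flips: `CHARINDEX(find, search)` becomes `instr(search, find)`. The sketch assumes the `DATEPART` part is written out in full as `year`, `month`, or `day`, and the column names in the usage line are illustrative only.

```python
import re

# Hypothetical helper for illustration only: rewrites a few simple T-SQL
# function calls into Spark SQL. Nested calls and arguments containing
# commas or parentheses are not recognized and are left unchanged.

# T-SQL CHARINDEX(find, search) -> Spark SQL instr(search, find).
# Both return a 1-based position, or 0 when the substring is not found,
# but the argument order is reversed. Calls that pass CHARINDEX's optional
# third start-position argument do not match and are left as-is.
_CHARINDEX = re.compile(
    r"\bCHARINDEX\(\s*([^,()]+?)\s*,\s*([^,()]+?)\s*\)", re.IGNORECASE
)

# T-SQL DATEPART(part, date) -> Spark SQL date_part('PART', date).
# Spark spells the function with an underscore and takes the field as a
# string. Assumes full part names (year, month, day); T-SQL abbreviations
# such as yy or mm are not mapped.
_DATEPART = re.compile(
    r"\bDATEPART\(\s*(\w+)\s*,\s*([^,()]+?)\s*\)", re.IGNORECASE
)


def tsql_to_spark_sql(query: str) -> str:
    """Rewrite simple CHARINDEX and DATEPART calls into Spark SQL."""
    query = _CHARINDEX.sub(lambda m: f"instr({m.group(2)}, {m.group(1)})", query)
    query = _DATEPART.sub(
        lambda m: f"date_part('{m.group(1).upper()}', {m.group(2)})", query
    )
    return query


if __name__ == "__main__":
    print(tsql_to_spark_sql(
        "SELECT CHARINDEX('-', countryOrRegion) AS pos, "
        "DATEPART(year, date) AS yr FROM publicholidays"
    ))
    # SELECT instr(countryOrRegion, '-') AS pos, date_part('YEAR', date) AS yr FROM publicholidays
```

A regular expression is enough for a quick sketch like this, but it is not a SQL parser. Anything it does not recognize passes through untouched, so you still need to review the result before running it in a notebook.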

Lakehouse Integration and Autogenerated Code

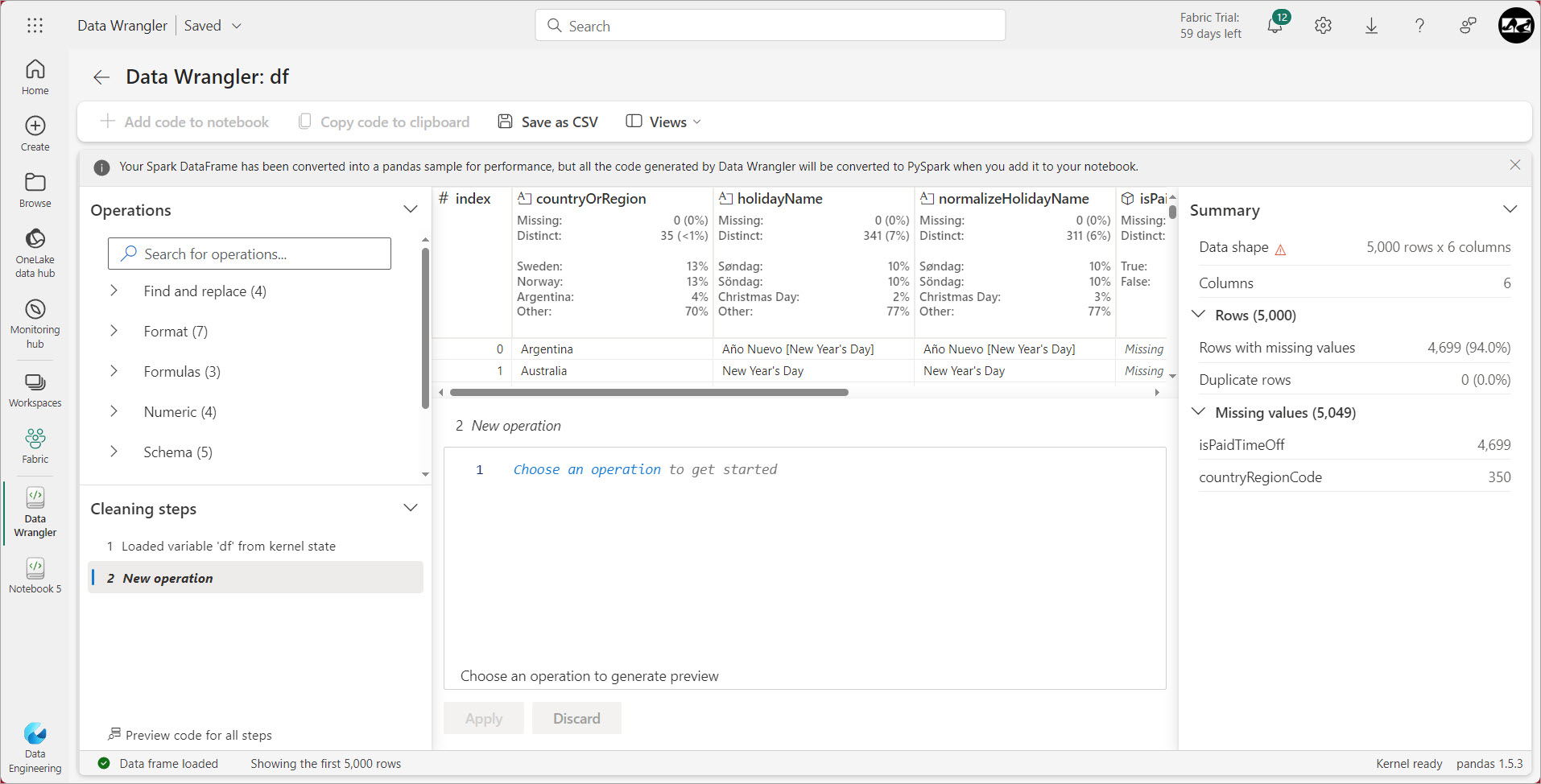

Like all Fabric experiences, the lakehouse is an integral part of the coding experience. When you create a new notebook, the first step is to add a lakehouse. Once it has been added, you can drag and drop elements into the notebook and Fabric will write the code for you. The code in the screenshot was literally created when I dragged the publicholidays table into the notebook.

Generating code with Copilot in Spark

Spark in Fabric includes a library called chat-magics, which adds AI features you can incorporate in your code if Copilot is enabled in your tenant. There are a few administrative steps needed to make that work. The first step is to check whether Copilot is supported for your Fabric tenant, as it is not available everywhere; check the list of supported regions to make sure it is possible. You will also need to pay for the feature, as Copilot is not available as part of the free trial and requires a Fabric F64 SKU or a P1 capacity. Once you have confirmed you can use Copilot, go to the Administrative settings and enable Copilot in your tenant, as shown below.

Once Copilot is enabled and active, you can turn it on by clicking the Copilot icon on the far right of the screen. If you don't see it, click the ellipsis, the three-dot menu where Microsoft hides all the cool stuff, and you will see the icon in a dropdown menu.

Chat-magics: Copilot Spark help

Here are the chat-magics commands designed to help you with your code.

%%chat – Answers your questions about items in your code, such as variables

%%describe – Provides a summary of the contents of a dataframe

%%code – Explain what code you want written, and Copilot will generate it for you

%%add_comments – Most people forget to comment their code; if this is you, you can have AI generate meaningful comments for you.

%%fix_errors – Using this command, Copilot will try to fix dependency, configuration, and resource allocation errors for you.

In my next post, I will provide examples of how to use chat-magics commands in Fabric.

Yours Always,

Ginger Grant

Data aficionado et Data Raconteur
